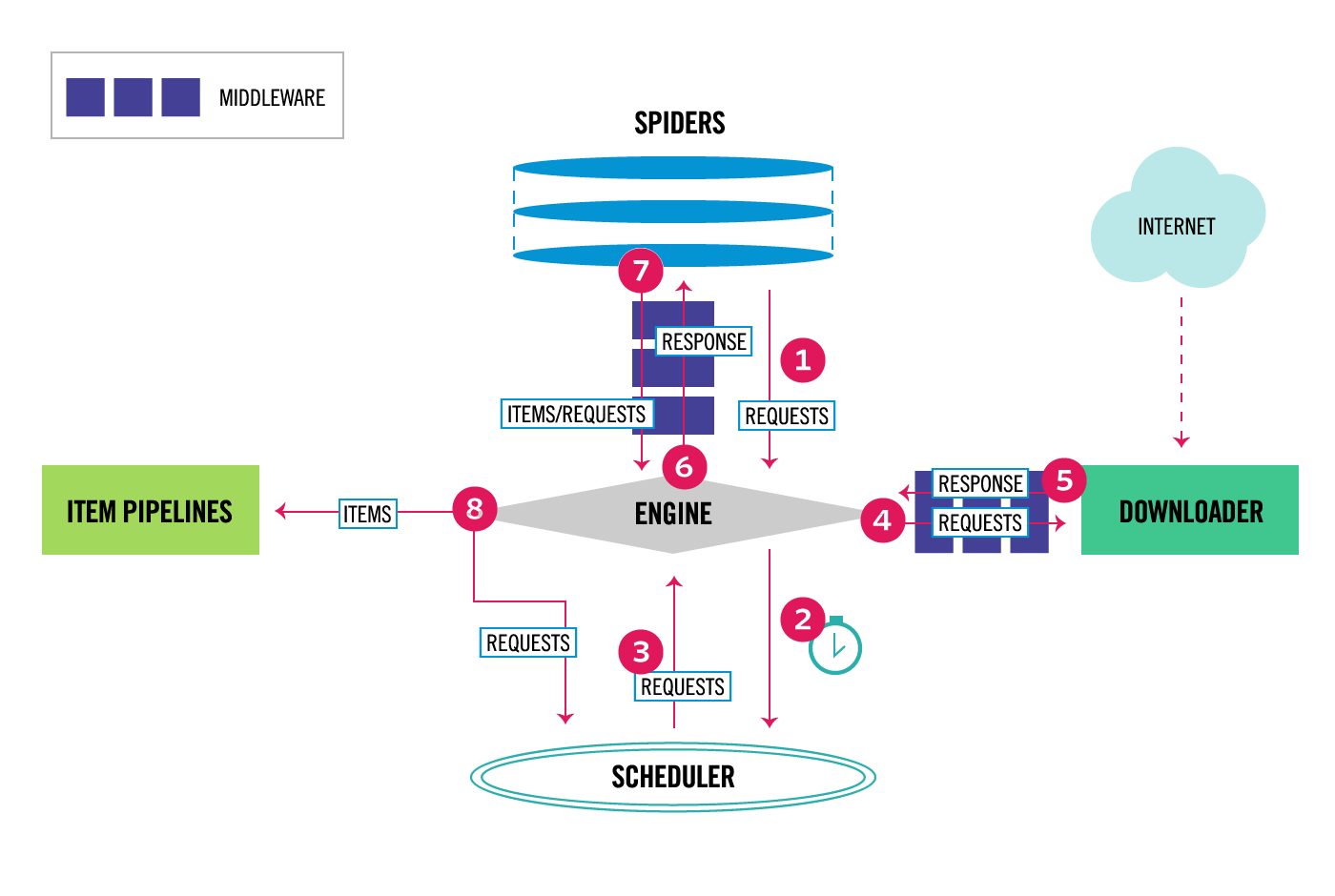

Data flow in the Scrapy framework

The documentation describes it this way: the data flow in Scrapy is controlled by the execution engine, and goes as follows:

1. The Engine gets the initial Requests to crawl from the Spider.

2. The Engine schedules the Requests in the Scheduler and asks for the next Requests to crawl.

3. The Scheduler returns the next Requests to the Engine.

4. The Engine sends the Requests to the Downloader, passing through the Downloader Middlewares (see `process_request()`).

5. Once the page finishes downloading the Downloader generates a Response (with that page) and sends it to the Engine, passing through the Downloader Middlewares (see `process_response()`).

6. The Engine receives the Response from the Downloader and sends it to the Spider for processing, passing through the Spider Middleware (see `process_spider_input()`).

7. The Spider processes the Response and returns scraped items and new Requests (to follow) to the Engine, passing through the Spider Middleware (see `process_spider_output()`).

8. The Engine sends processed items to Item Pipelines, then send processed Requests to the Scheduler and asks for possible next Requests to crawl.

9. The process repeats (from step 1) until there are no more requests from the Scheduler.
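To make the loop in the steps above concrete, here is a toy model in plain Python. It is only a sketch and not Scrapy's implementation: `FAKE_WEB`, `parse` and `run_toy_engine` are hypothetical names, requests are plain URL strings, and the middleware hooks are represented only by entries in a trace list.

```python
from collections import deque

# Hypothetical stand-in for the network: URL -> (page title, outgoing links).
FAKE_WEB = {
    "http://example.com/a": ("Page A", ["http://example.com/b"]),
    "http://example.com/b": ("Page B", []),
}


def parse(url, page):
    """Toy spider callback: yield one item, then the links to follow."""
    title, links = page
    yield {"url": url, "title": title}  # a scraped item
    for link in links:
        yield link  # a new request (just a URL string in this model)


def run_toy_engine(start_urls):
    """Run the toy loop and return (trace of steps, collected items)."""
    trace, items = [], []
    scheduler = deque()
    seen = set()

    def schedule(url):
        # The scheduler queues each URL at most once.
        if url not in seen:
            seen.add(url)
            scheduler.append(url)
            trace.append(f"schedule {url}")

    # The engine takes the spider's initial requests and schedules them.
    for url in start_urls:
        schedule(url)

    # Repeat until the scheduler has no more requests.
    while scheduler:
        url = scheduler.popleft()                    # scheduler -> engine
        trace.append(f"process_request {url}")       # engine -> downloader
        page = FAKE_WEB[url]                         # the "download"
        trace.append(f"process_response {url}")      # downloader -> engine
        trace.append(f"process_spider_input {url}")  # engine -> spider
        results = list(parse(url, page))
        trace.append(f"process_spider_output {url}")  # spider -> engine
        for result in results:
            if isinstance(result, dict):
                trace.append(f"pipeline {result['title']}")  # item -> pipeline
                items.append(result)
            else:
                schedule(result)                     # request -> scheduler
    return trace, items
```

Calling `run_toy_engine(["http://example.com/a"])` returns the trace together with the two collected items; printing the trace line by line shows each request travelling through the same stages in turn.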

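But how does this actually show up in a running program? When the project runs, the console already prints log messages. To see the flow more directly, you can print your own message in the function that corresponds to each step. For example, in your own project's item pipeline you could do this: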

    class MyPipeline(object):

Once the project runs, you can see the order in which they are printed:

    ------------ from_crawler --------------
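A fuller version of such a pipeline might look like the sketch below. The hook names (`from_crawler`, `open_spider`, `process_item`, `close_spider`) are the ones Scrapy calls on an item pipeline; the `announce` helper, the `CALLS` list and the `simulate_call_order` driver are assumptions added here for illustration, and the driver only imitates the expected call order so the class can be tried without running a crawl.

```python
CALLS = []  # hypothetical helper: records the hook order for inspection


def announce(name):
    """Print a banner in the style of the output above and record the hook name."""
    CALLS.append(name)
    print(f"------------ {name} --------------")


class MyPipeline(object):
    """Item pipeline that announces each hook when it is called."""

    def __init__(self):
        announce("__init__")

    @classmethod
    def from_crawler(cls, crawler):
        # Scrapy uses this factory to build the pipeline when it is defined.
        announce("from_crawler")
        return cls()

    def open_spider(self, spider):
        # Called when the spider is opened.
        announce("open_spider")

    def process_item(self, item, spider):
        # Called for every item; must return the item (or raise DropItem).
        announce("process_item")
        return item

    def close_spider(self, spider):
        # Called when the spider is closed.
        announce("close_spider")


def simulate_call_order():
    """Imitate the order in which the hooks are expected to run (not Scrapy itself)."""
    pipeline = MyPipeline.from_crawler(crawler=None)
    pipeline.open_spider(spider=None)
    pipeline.process_item({"title": "demo"}, spider=None)
    pipeline.close_spider(spider=None)
    return list(CALLS)
```

In a real project the class would also need to be enabled through the `ITEM_PIPELINES` setting, for example `ITEM_PIPELINES = {"myproject.pipelines.MyPipeline": 300}`, where `myproject` is a placeholder for the actual project name.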

Understanding the framework's processing logic is very helpful for writing efficient code.